Let’s look at how to use the Azure IoT services through the connected car use case we discussed earlier. In this example, the connected car device manufacturer partners with Microsoft and uses the Azure cloud services to realize the use cases described in the connected car section.

Hardware and Connectivity

The connected car device manufacturer takes care of the hardware devices and network provisioning, using either a built-in GSM module or internet connectivity shared from a smartphone over Bluetooth or Wi-Fi. The manufacturer is responsible for reliable connectivity and efficient use of the cellular network (2G/3G/4G LTE). To communicate with the Azure platform, the device ships with pre-installed connectivity code built on the Azure IoT device SDKs.
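The device SDKs handle authentication for you, but it helps to see what the pre-installed connectivity code does when a device authenticates with a symmetric key. The sketch below assumes a device connection string of the form `HostName=...;DeviceId=...;SharedAccessKey=...` and derives a shared access signature (SAS) token by signing the URL-encoded resource URI and an expiry time with HMAC-SHA256. The hub name, device ID, and key in the usage example are placeholders, and the one-hour lifetime is an arbitrary choice for the sketch.

```python
import base64
import hashlib
import hmac
import time
from typing import Dict, Optional
from urllib.parse import quote_plus, urlencode


def parse_connection_string(conn_str: str) -> Dict[str, str]:
    """Split 'HostName=...;DeviceId=...;SharedAccessKey=...' into a dict.

    Only the first '=' in each pair is treated as the separator, so base64
    padding at the end of the key is preserved.
    """
    pairs = (item.split("=", 1) for item in conn_str.split(";") if item)
    return {key: value for key, value in pairs}


def generate_sas_token(resource_uri: str, key_b64: str,
                       ttl_seconds: int = 3600, now: Optional[float] = None) -> str:
    """Build a SAS token for resource_uri, signed with a base64-encoded key."""
    expiry = int((time.time() if now is None else now) + ttl_seconds)
    # String to sign: URL-encoded resource URI, a newline, then the expiry in epoch seconds.
    string_to_sign = f"{quote_plus(resource_uri)}\n{expiry}"
    digest = hmac.new(base64.b64decode(key_b64),
                      string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    signature = base64.b64encode(digest).decode("ascii")
    # urlencode() percent-encodes every value, including '+', '/' and '=' in the signature.
    return "SharedAccessSignature " + urlencode(
        {"sr": resource_uri, "sig": signature, "se": str(expiry)})


if __name__ == "__main__":
    # Placeholder values only; a real device reads its own provisioned connection string.
    conn = parse_connection_string(
        "HostName=contoso-cars.azure-devices.net;DeviceId=car-001;"
        "SharedAccessKey=c2VjcmV0LWtleQ==")
    uri = f"{conn['HostName']}/devices/{conn['DeviceId']}"
    print(generate_sas_token(uri, conn["SharedAccessKey"]))
```

Passing `now` explicitly makes the token reproducible, which is convenient for testing; in normal use the current time is taken.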

Each pre-shipped device comes with strong security on both the hardware and the software side, and with a unique identity (device ID) that ensures only authorized devices can talk to the Azure platform. The device manufacturer has provisioned all the devices (as part of its device design and provisioning step) using the Azure Device Management APIs, which are exposed as HTTP REST endpoints. The manufacturer also implements commands such as pause, start, stop, and diagnose, which can be invoked through IoT Hub. The device software uses the Azure Device SDK to transmit data from the connected car securely to the Azure IoT platform as JSON over the AMQP protocol. The device also has a display unit that shows usage information and messages from the Azure IoT platform.
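The device SDK takes care of the AMQP transport, so the application code on the car mainly has to shape its telemetry and react to commands. The sketch below illustrates both halves under stated assumptions: the `CarTelemetry` schema and its field names are hypothetical, and `CommandHandler` is a plain dispatcher for the pause, start, stop, and diagnose commands, which a real device would wire up to IoT Hub direct methods (or another cloud-to-device mechanism) through the SDK. The 200/404 status codes are likewise an assumption chosen for the sketch.

```python
import json
from dataclasses import asdict, dataclass
from typing import Callable, Dict


@dataclass
class CarTelemetry:
    # Hypothetical telemetry schema; the field names are illustrative only.
    device_id: str
    speed_kmh: float
    engine_oil_pct: float
    tire_pressure_psi: float


def to_message_body(reading: CarTelemetry) -> bytes:
    """Serialize one reading as UTF-8 encoded JSON for the device SDK to send."""
    return json.dumps(asdict(reading)).encode("utf-8")


class CommandHandler:
    """Dispatches the pause/start/stop/diagnose commands invoked through IoT Hub."""

    def __init__(self) -> None:
        self.state = "stopped"
        self._handlers: Dict[str, Callable[[], str]] = {
            "start": lambda: self._set("running"),
            "stop": lambda: self._set("stopped"),
            "pause": lambda: self._set("paused"),
            "diagnose": lambda: f"state={self.state}",  # reads state at call time
        }

    def _set(self, state: str) -> str:
        self.state = state
        return state

    def handle(self, command: str) -> Dict[str, object]:
        """Return an HTTP-style status plus a payload for the caller."""
        handler = self._handlers.get(command)
        if handler is None:
            return {"status": 404, "payload": f"unknown command: {command}"}
        return {"status": 200, "payload": handler()}


if __name__ == "__main__":
    print(to_message_body(CarTelemetry("car-001", 88.0, 45.0, 32.0)))
    device = CommandHandler()
    for cmd in ("start", "pause", "diagnose", "reboot"):
        print(cmd, device.handle(cmd))
```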

Using Azure IoT Services

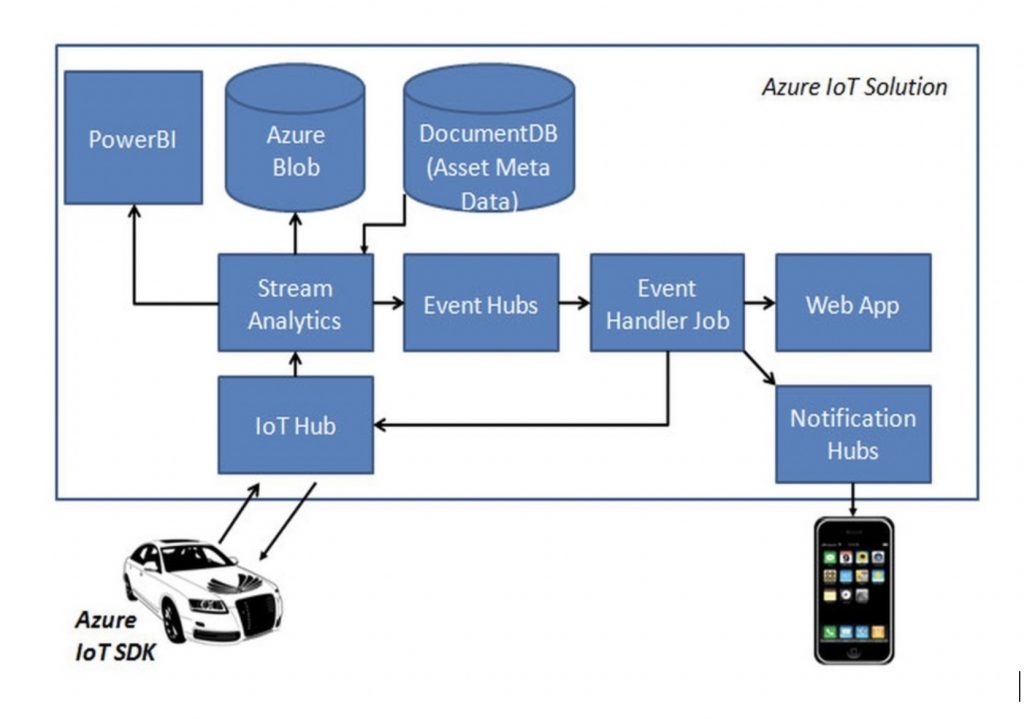

The solution uses the Azure IoT services we described earlier to build the connected car IoT application.

Several activities need to be completed before the Azure platform can receive messages and start processing them. Our solution processes the incoming data in two ways: real-time analysis and batch analysis. The real-time approach processes the continuous stream of data arriving at IoT Hub from the devices and takes the required action at runtime, such as raising an alert, sending data back to the device, or invoking a third-party service to create a maintenance order. Batch analysis stores the data for later analysis and runs complex analytics jobs over it, or reuses existing Hadoop jobs. Batch analysis is also used to develop and train machine learning models iteratively; the trained models are then deployed and used at runtime to drive real-time actions.

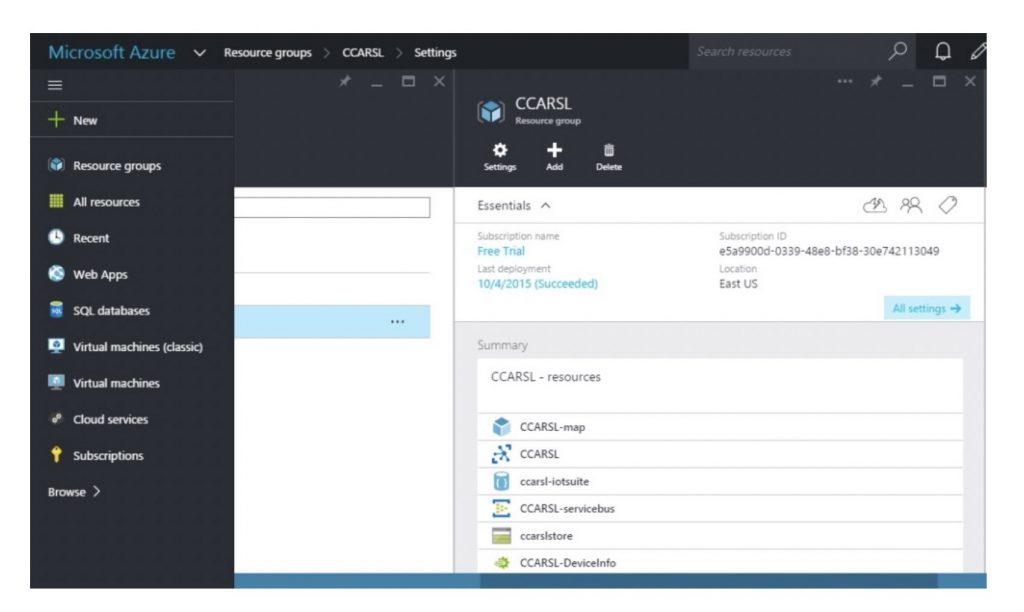

The image above shows the Azure Management Portal, where a set of tasks needs to be executed.

The following are the high-level steps to perform in the Azure Management Portal:

- Create Resource Group

- Create an IoT Hub

- Create Device Identity

- Provision hardware devices

- Create Storage Service

- Create Azure Stream Analytics Jobs

- Create Event Hubs

- Create Power BI dashboards

- Create Notification Hubs

- Create Machine Learning (ML) model

We discussed all of the above capabilities in the earlier article, except the resource group. A resource group is a container for all the resources related to a specific application; the resources in it share the same subscription and are typically deployed to the same location. We create one resource group for the connected car solution and place all of its resources in it. We will not go over the configuration steps in detail; instead, we summarize one execution flow for the connected car use case that uses the above resources.
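Some of the portal steps depend on others (an IoT Hub must exist before device identities can be registered in it, for example), so the order of setup matters. The sketch below orders the steps with Python's `graphlib.TopologicalSorter` (Python 3.9+) and tags every step with a single resource group and location. The dependency graph, the `rg-connected-car` name, and the `westeurope` region are assumptions for illustration, not settings taken from this article.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set, Tuple

RESOURCE_GROUP = "rg-connected-car"  # hypothetical resource group name
LOCATION = "westeurope"              # hypothetical region

# Assumed prerequisites for each portal step (step -> steps it depends on).
DEPENDENCIES: Dict[str, Set[str]] = {
    "resource_group": set(),
    "iot_hub": {"resource_group"},
    "device_identities": {"iot_hub"},
    "hardware_devices": {"device_identities"},
    "storage": {"resource_group"},
    "event_hubs": {"resource_group"},
    "notification_hubs": {"resource_group"},
    "stream_analytics_jobs": {"iot_hub", "storage", "event_hubs"},
    "power_bi_dashboards": {"stream_analytics_jobs"},
    "ml_model": {"storage"},
}


def provisioning_plan(deps: Dict[str, Set[str]] = DEPENDENCIES,
                      group: str = RESOURCE_GROUP,
                      location: str = LOCATION) -> List[Tuple[str, str, str]]:
    """Return (step, resource group, location) tuples in a dependency-respecting order."""
    order = TopologicalSorter(deps).static_order()
    return [(step, group, location) for step in order]


if __name__ == "__main__":
    for step, group, location in provisioning_plan():
        print(f"{step:<24} {group} ({location})")
```

Any order the sorter produces is valid as long as each step appears after its prerequisites; independent steps such as storage and Event Hubs could also be created in parallel.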

Real-time Flow

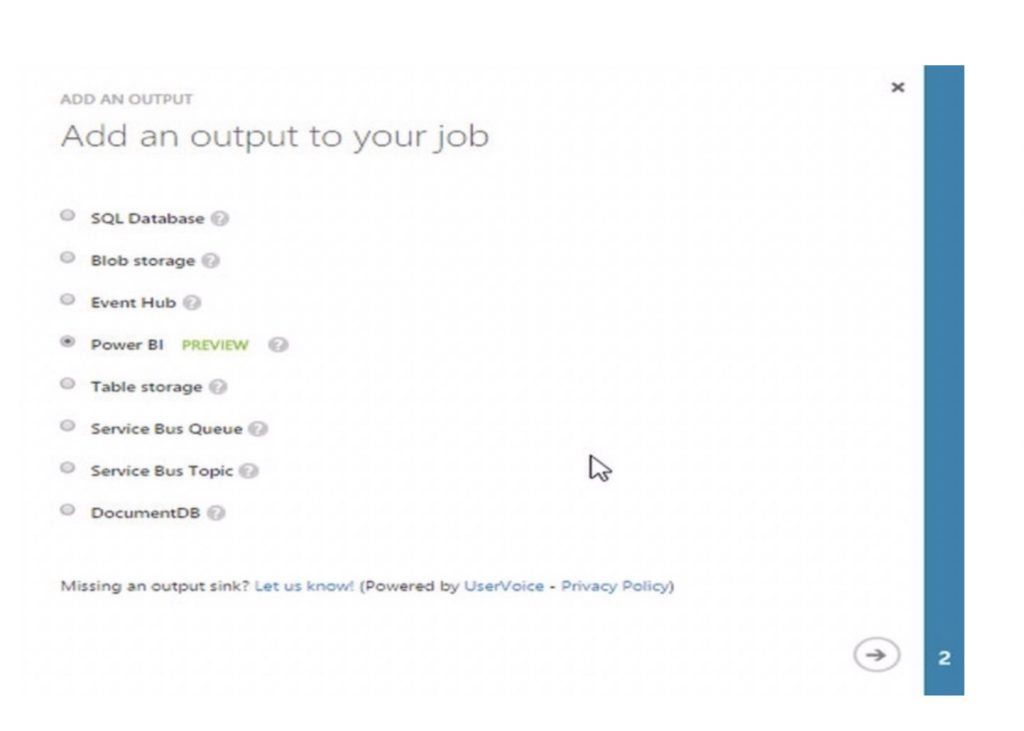

The IoT Hub receives data from the connected car device over AMQP. Once the data is received, the stream is consumed by Azure Stream Analytics jobs. When configuring an Azure Stream Analytics job, you specify IoT Hub as the input source, along with the input serialization format (JSON) and encoding (UTF-8). This streams all data from the IoT Hub into the job. As part of the output configuration, you specify where the job's output should go, for instance Blob Storage, Event Hubs, or Power BI. The following image shows the list of output options:
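The JSON and UTF-8 input settings mean that each message body arriving from IoT Hub is decoded as UTF-8 text and then parsed as a JSON object. Stream Analytics does this for you; the sketch below only makes the two steps explicit. Skipping and counting bodies that fail either step is a hypothetical policy chosen for the sketch, not a description of how the service reports deserialization errors.

```python
import json
from typing import Iterable, List, Tuple


def deserialize_events(bodies: Iterable[bytes],
                       encoding: str = "utf-8") -> Tuple[List[dict], int]:
    """Decode each body with the configured encoding and parse it as a JSON object.

    Returns the parsed events and the number of bodies that were skipped.
    """
    events: List[dict] = []
    skipped = 0
    for body in bodies:
        try:
            event = json.loads(body.decode(encoding))
        except (UnicodeDecodeError, json.JSONDecodeError):
            skipped += 1  # not valid text in this encoding, or not valid JSON
            continue
        if isinstance(event, dict):
            events.append(event)
        else:
            skipped += 1  # valid JSON, but not an object (e.g. a bare array)
    return events, skipped


if __name__ == "__main__":
    sample = [b'{"device_id": "car-001", "speed_kmh": 88}', b"not json"]
    print(deserialize_events(sample))
```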

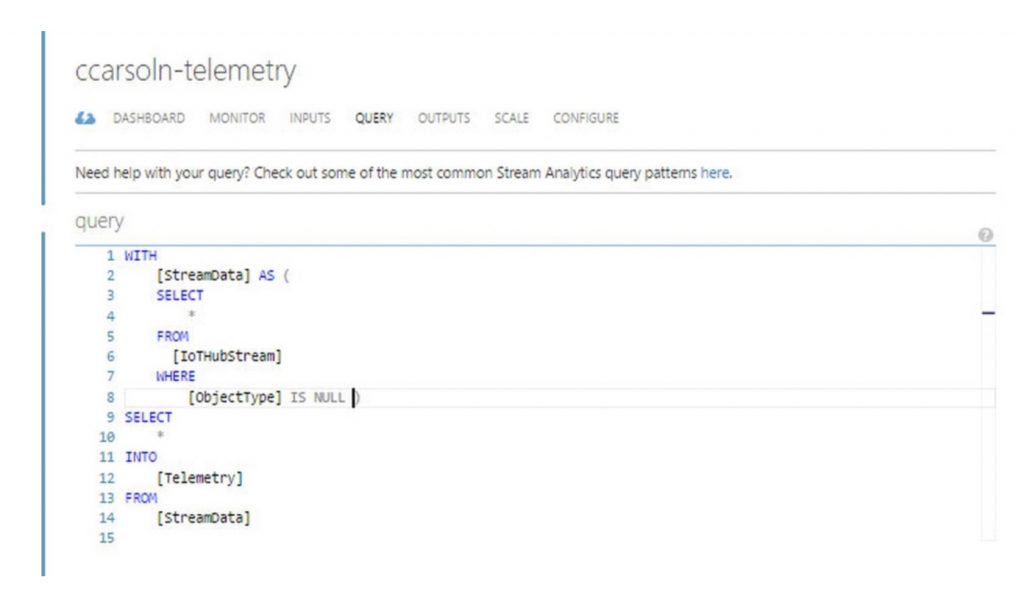

In the Query tab of the Azure Stream Analytics job, you specify the query, written in a SQL-like query language, that operates on the input data and produces the output. The output (in JSON format) is delivered to the configured output.
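A Stream Analytics query can contain several `SELECT ... INTO <output> FROM <input>` statements, one per output. The Python sketch below emulates the simplest such query, a pass-through that copies every input event unchanged to each named output, and then serializes one output as line-separated JSON, one of the JSON layouts a Blob Storage output can use. The output names in the usage example are hypothetical.

```python
import json
from typing import Dict, Iterable, List

Event = Dict[str, object]


def passthrough(events: Iterable[Event], outputs: List[str]) -> Dict[str, List[Event]]:
    """Emulate 'SELECT * INTO <output> FROM <input>' once for each output."""
    results: Dict[str, List[Event]] = {name: [] for name in outputs}
    for event in events:
        for name in outputs:
            results[name].append(dict(event))  # each output gets its own copy
    return results


def to_line_separated_json(events: Iterable[Event]) -> str:
    """One JSON object per line, as a blob sink might store them."""
    return "\n".join(json.dumps(event) for event in events)


if __name__ == "__main__":
    incoming = [{"device_id": "car-001", "speed_kmh": 88},
                {"device_id": "car-002", "speed_kmh": 104}]
    routed = passthrough(incoming, ["blob-archive", "powerbi-dashboard"])
    print(to_line_separated_json(routed["blob-archive"]))
```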

For our connected car scenario, we create two Azure Stream Analytics jobs. For the first job, we specify IoT Hub as the input, and the query selects all incoming data. Two outputs are configured: one writes the data to Azure Blob Storage for further analysis, and the other sends it to Power BI to build a dashboard. The following image shows a snippet of the Query view:

For the second Azure Stream Analytics job, we create two inputs: the first is the IoT Hub, and the second is the Asset DB, which contains the asset metadata. In the query, we define condition-based rules that trigger when a reading falls outside its permissible limits (for example, speed above 100 km/h, low engine oil, or low tire pressure). The rules correlate the asset metadata with the connected car's runtime data, so each condition is evaluated against that asset's specification. The asset specification contains the asset details and the permissible limits for each component, be it the engine, tire pressure, engine oil, and so on. This is simple condition-based maintenance. The rule results are written to an output, in this case Event Hubs, for further processing by various applications.
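In Stream Analytics this correlation would be a JOIN between the streaming IoT Hub input and the Asset DB reference input, with the conditions in a WHERE clause. The Python sketch below emulates that logic so the rule evaluation is easy to follow. The 100 km/h speed limit comes from the text above; the engine oil and tire pressure limits, the model name, and the device-to-model mapping are hypothetical placeholders for rows in the Asset DB.

```python
import operator
from typing import Callable, Dict, List, Tuple

Event = Dict[str, object]

# Hypothetical Asset DB content: permissible limits per car model ...
ASSET_SPECS: Dict[str, Dict[str, float]] = {
    "model-a": {"max_speed_kmh": 100, "min_engine_oil_pct": 20,
                "min_tire_pressure_psi": 30},
}
# ... and which model each device belongs to.
DEVICE_MODEL: Dict[str, str] = {"car-001": "model-a"}

# (telemetry field, spec key, breach test, alert name)
RULES: List[Tuple[str, str, Callable[[float, float], bool], str]] = [
    ("speed_kmh", "max_speed_kmh", operator.gt, "overspeed"),
    ("engine_oil_pct", "min_engine_oil_pct", operator.lt, "low_engine_oil"),
    ("tire_pressure_psi", "min_tire_pressure_psi", operator.lt, "low_tire_pressure"),
]


def evaluate(event: Event) -> List[Event]:
    """Join one reading with its asset spec and return one alert per breached limit."""
    model = DEVICE_MODEL.get(str(event["device_id"]))
    if model is None:
        return []  # no matching reference row, so an inner join yields nothing
    spec = ASSET_SPECS[model]
    return [
        {"device_id": event["device_id"], "alert": alert,
         "value": event[name], "limit": spec[limit_key]}
        for name, limit_key, breached, alert in RULES
        if name in event and breached(event[name], spec[limit_key])
    ]


if __name__ == "__main__":
    reading = {"device_id": "car-001", "speed_kmh": 120,
               "engine_oil_pct": 50, "tire_pressure_psi": 25}
    for alert in evaluate(reading):
        print(alert)
```

Keeping the rules in a table mirrors the idea that limits live in the asset specification rather than being hard-coded per condition.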

We create a custom event handler that acts as an Event Hubs consumer: it picks up the rule results from Event Hubs and uses the Notification Hubs APIs to push high-priority events to mobile devices. The handler also sends updates to web dashboards and sends a message back to the car through the IoT Hub device queue, addressed by the device ID. The connected car device then shows the notification on its display.
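The handler's routing logic can be sketched independently of the SDKs. In the sketch below, `RecordingSinks` is a hypothetical stand-in that simply records what would be sent to Notification Hubs, the web dashboard, and the car via IoT Hub; the `priority` field and the message text are assumptions as well. A real implementation would read events with an Event Hubs consumer (for example `EventHubConsumerClient` from the `azure-eventhub` package) and call the Notification Hubs and IoT Hub service APIs in place of the list appends.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, List, Tuple

Event = Dict[str, object]


@dataclass
class RecordingSinks:
    """Hypothetical stand-ins for the three downstream channels."""
    mobile_push: List[str] = field(default_factory=list)             # Notification Hubs
    dashboard: List[Event] = field(default_factory=list)             # web dashboards
    to_device: List[Tuple[str, str]] = field(default_factory=list)   # (device ID, message) via IoT Hub


def handle_event(event: Event, sinks: RecordingSinks) -> None:
    """Route one rule result to the appropriate channels."""
    message = f"{event['alert']}: value {event['value']} (limit {event['limit']})"
    if event.get("priority") == "high":
        sinks.mobile_push.append(message)  # only high-priority events reach phones
    sinks.dashboard.append(event)
    sinks.to_device.append((str(event["device_id"]), message))


def consume(batch: Iterable[Event], sinks: RecordingSinks) -> None:
    """Process one batch of events as they would arrive from Event Hubs."""
    for event in batch:
        handle_event(event, sinks)


if __name__ == "__main__":
    sinks = RecordingSinks()
    consume([{"device_id": "car-001", "alert": "overspeed",
              "value": 120, "limit": 100, "priority": "high"}], sinks)
    print(sinks)
```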

Offline Process

Now let’s discuss the offline process. It is mainly used for batch processing: analyzing large volumes of data, correlating data from multiple sources, and running complex data flows. The other scenario is developing machine learning models from these data sets, training and testing them iteratively until they can predict or classify with reasonable accuracy.
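In the solution this work would run in the batch environment, for example with Azure Machine Learning over the data stored in Blob Storage. The toy sketch below only illustrates the iterative train-and-test loop: it fits a one-feature threshold classifier on a training split, scores it on the held-out split, and repeats over several random splits (repeated holdout). The feature, the labels, and the synthetic data in the usage example are hypothetical.

```python
import random
from typing import List, Sequence, Tuple

Sample = Tuple[float, int]  # (hypothetical feature value, label: 1 = maintenance needed)


def train_test_split(samples: Sequence[Sample], test_fraction: float = 0.25,
                     seed: int = 0) -> Tuple[List[Sample], List[Sample]]:
    """Shuffle with a fixed seed and cut into training and test sets."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]


def accuracy(threshold: float, samples: Sequence[Sample]) -> float:
    """Fraction of samples where 'feature >= threshold' matches the label."""
    return sum((x >= threshold) == bool(y) for x, y in samples) / len(samples)


def fit_threshold(train: Sequence[Sample]) -> float:
    """Pick the training value that maximizes training accuracy."""
    return max(sorted({x for x, _ in train}), key=lambda t: accuracy(t, train))


def repeated_holdout(samples: Sequence[Sample], rounds: int = 5) -> float:
    """Repeat split, fit, and score with different seeds; return mean test accuracy."""
    scores = []
    for seed in range(rounds):
        train, test = train_test_split(samples, seed=seed)
        scores.append(accuracy(fit_threshold(train), test))
    return sum(scores) / len(scores)


if __name__ == "__main__":
    # Synthetic data for illustration only: label is 1 when the feature is >= 50.
    data = [(float(x), int(x >= 50)) for x in range(0, 100, 5)]
    print(f"mean test accuracy: {repeated_holdout(data):.2f}")
```

A held-out score well below the training score is the usual signal to revisit features or model choice before deploying the model for real-time use.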