The Analytics Platform layer provides a set of key capabilities for analyzing large volumes of information, deriving insights, and enabling applications to take the required action.

Stream Processing

Real-time stream processing means processing streams of data from devices (or any other source) as they arrive: analyzing the information, performing computations, and triggering events for the required actions. The stream processing infrastructure interacts directly with the IoT Messaging Middleware component by listening to specified topics. It acts as a subscriber that consumes the messages (data from devices) arriving continuously at the IoT Messaging Middleware layer.
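A minimal sketch of the subscriber role described above, assuming a hypothetical in-memory `InMemoryBroker` that stands in for the IoT Messaging Middleware (a real deployment would use an MQTT or Kafka client instead). The topic name `devices/temperature`, the message fields, and the overheat threshold are illustrative only.

```python
import queue
from collections import defaultdict


class InMemoryBroker:
    """Hypothetical stand-in for the IoT Messaging Middleware: topic -> subscriber queues."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic):
        # Each subscriber gets its own queue for the topic it listens to.
        inbox = queue.Queue()
        self._subscribers[topic].append(inbox)
        return inbox

    def publish(self, topic, message):
        # Deliver the message to every queue subscribed to this topic.
        for inbox in self._subscribers[topic]:
            inbox.put(message)


def consume(inbox, threshold_f, max_messages=100):
    """Stream-processing loop: read messages, compute, and emit events."""
    events = []
    for _ in range(max_messages):
        try:
            msg = inbox.get(timeout=0.1)  # a real consumer would keep blocking
        except queue.Empty:
            break
        temp_f = msg["temp_c"] * 9 / 5 + 32  # computation step
        if temp_f > threshold_f:  # condition that triggers an event
            events.append({"device": msg["device"], "event": "overheat", "temp_f": temp_f})
    return events


broker = InMemoryBroker()
inbox = broker.subscribe("devices/temperature")  # hypothetical topic name
for device, temp_c in [("pump-1", 40.0), ("pump-2", 90.0)]:
    broker.publish("devices/temperature", {"device": device, "temp_c": temp_c})

events = consume(inbox, threshold_f=150.0)
print(events)
```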

Typical requirements for stream processing software include high scalability, the ability to handle large volumes of continuous data, fault tolerance, and support for interactive queries in some form, such as SQL queries that act on the stream of data and trigger alerts when conditions are not met.
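The alerting requirement can be illustrated without tying it to a particular engine. The sketch below assumes a simple count-based sliding window over numeric readings and, for each new reading, evaluates the equivalent of a windowed-average query; it raises an alert whenever the condition `avg >= min_avg` is not met. Window size, threshold, and readings are made-up values.

```python
from collections import deque


def windowed_alerts(readings, window_size, min_avg):
    """Evaluate the average of the last `window_size` readings after each new
    reading and record an alert whenever avg >= min_avg does NOT hold."""
    window = deque(maxlen=window_size)  # oldest reading drops out automatically
    alerts = []
    for i, value in enumerate(readings):
        window.append(value)
        if len(window) < window_size:
            continue  # wait until the window is full
        avg = sum(window) / window_size
        if avg < min_avg:
            alerts.append((i, round(avg, 2)))
    return alerts


readings = [30, 31, 29, 24, 20, 19, 28, 31]  # illustrative pressure readings
alerts = windowed_alerts(readings, window_size=3, min_avg=25)
print(alerts)  # [(4, 24.33), (5, 21.0), (6, 22.33)]
```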

Most big data implementations started with Hadoop, which supported only batch processing. With the advent of real-time streaming technologies and the growing requirement to deal with massive amounts of data in real time, applications are now migrating to stream-based technologies that can process data in real time. Stream processing platforms such as Apache Spark Streaming let you write streaming jobs that process data using the Spark API, and combine streams with batch and interactive queries. Projects that already use a Hadoop-based batch processing system can therefore still benefit from real-time stream processing by combining their batch queries with the stream-based interactive queries offered by Spark Streaming.
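Spark's Structured Streaming documentation describes combining a stream with static (batch) data as a stream-static join. The plain-Python sketch below is not Spark code; it only illustrates the idea under stated assumptions: a per-device baseline is computed once from historical (batch) data, and each live event is enriched with that baseline and flagged when it deviates by more than a hypothetical tolerance.

```python
from statistics import mean

# "Batch" side: historical readings, e.g. produced by an earlier batch job (illustrative data).
history = {
    "pump-1": [50.0, 52.0, 48.0],
    "pump-2": [70.0, 72.0, 74.0],
}
baseline = {device: mean(values) for device, values in history.items()}


def enrich(stream, baseline, tolerance):
    """Join each live event with the batch baseline and flag large deviations."""
    for event in stream:
        expected = baseline.get(event["device"])
        if expected is None:
            continue  # no historical data for this device yet
        deviation = event["value"] - expected
        yield {**event, "baseline": expected, "deviation": deviation,
               "flag": abs(deviation) > tolerance}


# "Stream" side: events arriving in real time (illustrative data).
live = [
    {"device": "pump-1", "value": 51.0},
    {"device": "pump-2", "value": 85.0},
    {"device": "pump-3", "value": 10.0},
]
results = list(enrich(live, baseline, tolerance=5.0))
for r in results:
    print(r)
```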

The stream processing engine acts as a data processing backbone that can hand off streams of data to multiple other services simultaneously for parallel execution, or to your own custom application for processing. For instance, a stream processing instance can invoke a set of custom applications: one that executes complex rules and another that invokes a machine learning service.
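A minimal sketch of fanning one event out to several services in parallel, using Python's `concurrent.futures.ThreadPoolExecutor`. Both handlers are hypothetical stand-ins: `rules_engine` applies a made-up compound rule, and `ml_service` fakes a model score where a real system would call an external scoring endpoint.

```python
from concurrent.futures import ThreadPoolExecutor


def rules_engine(event):
    # Hypothetical "complex rule": high vibration AND high temperature.
    return ("rules", event["vibration"] > 0.8 and event["temp"] > 90)


def ml_service(event):
    # Hypothetical stand-in for a call to a machine learning scoring service.
    score = min(1.0, 0.5 * event["vibration"] + 0.005 * event["temp"])
    return ("ml", round(score, 3))


HANDLERS = [rules_engine, ml_service]


def fan_out(event, handlers=HANDLERS):
    """Hand the same event to every handler concurrently and collect the results."""
    with ThreadPoolExecutor(max_workers=len(handlers)) as pool:
        futures = [pool.submit(handler, event) for handler in handlers]
        return dict(f.result() for f in futures)


result = fan_out({"device": "motor-7", "vibration": 0.9, "temp": 95})
print(result)
```

Threads fit this pattern because real handlers would mostly wait on network I/O; CPU-heavy handlers would be better served by a process pool or separate services.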

Machine Learning

The following is Wikipedia's definition of machine learning:

“Machine learning explores the study and construction of algorithms that can learn from and make predictions on data.”

In simple terms, machine learning is how we make computers learn from data using various algorithms, without explicitly programming them, so that they can produce the required outcome, such as classifying an email as spam or not spam, or predicting a real estate price based on historical values and other environmental factors.
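To make "learning from data instead of explicit programming" concrete, the sketch below fits a straight line to a few labelled (size, price) examples using ordinary least squares and then predicts an unseen price. The numbers are toy, noise-free values chosen so the result is easy to check; real price models use many more features and data points.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Labelled training examples: floor area (m^2) and sale price. Toy values.
sizes = [50, 80, 100, 120]
prices = [150_000, 240_000, 300_000, 360_000]

# The relationship is learned from the examples, not hand-coded.
slope, intercept = fit_line(sizes, prices)


def predict(size):
    return slope * size + intercept


print(predict(90))  # 270000.0
```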

Machine learning approaches are typically classified into three broad categories:

- Supervised learning – In this methodology, we provide labeled data (inputs and their desired outputs) and train the system to learn from it and predict outcomes. Classic examples of supervised learning are Facebook automatically recognizing a friend in your photos based on your earlier tags, and your email application automatically recognizing spam.

- Unsupervised learning – In this methodology, we don’t provide labeled data; instead, algorithms are left to find hidden structure in unlabeled data. For instance, clustering similar news articles into one bucket and segmenting users for marketing are examples of unsupervised learning.

- Reinforcement learning – Reinforcement learning is about systems learning by interacting with an environment rather than by being taught. For instance, a computer playing chess knows what it means to win or lose, but how to play in order to win is learned over time through interactions with the user.
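Of the three categories, unsupervised learning is the easiest to show in a few lines. The sketch below uses k-means, one common clustering algorithm (the text does not prescribe a specific one), to segment users by a hypothetical monthly spend figure with no labels supplied. The data and the starting centroids are illustrative assumptions.

```python
def kmeans_1d(values, centroids, max_iterations=20):
    """Plain k-means on one-dimensional data (no labels required)."""
    centroids = list(centroids)
    for _ in range(max_iterations):
        # Assignment step: put each value in the cluster of its nearest centroid.
        clusters = [[] for _ in centroids]
        for v in values:
            nearest = min(range(len(centroids)), key=lambda i: abs(v - centroids[i]))
            clusters[nearest].append(v)
        # Update step: move each centroid to the mean of its cluster.
        updated = [sum(c) / len(c) if c else centroids[i] for i, c in enumerate(clusters)]
        if updated == centroids:  # converged: centroids stopped moving
            break
        centroids = updated
    return centroids, clusters


monthly_spend = [10, 12, 11, 50, 52, 55, 100, 98, 102]  # hypothetical, unlabeled
centroids, segments = kmeans_1d(monthly_spend, centroids=[0, 60, 120])
print(segments)  # [[10, 12, 11], [50, 52, 55], [100, 98, 102]]
```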

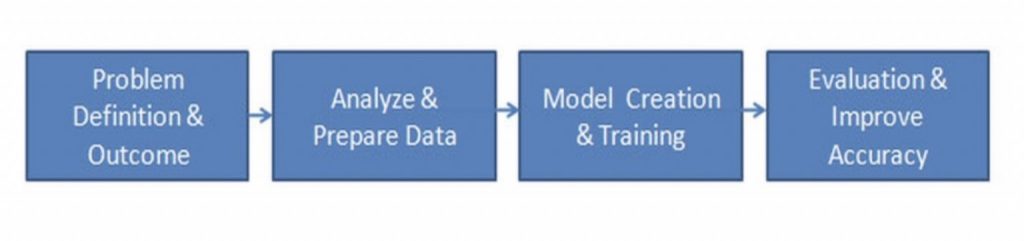

The machine learning process typically consists of four phases, as shown in the figure above, covering the problem definition and the expected business outcome, data cleansing and analysis, and model creation, training, and evaluation. This is an iterative process in which models are continuously refined to improve their accuracy.
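The iterative create, train, and evaluate loop can be sketched as a search over candidate models scored on held-out data. In this toy version, each candidate is a moving-average forecaster with a different window size (a deliberately simple stand-in for a real model), scored by mean absolute error on the last part of an illustrative series; the most accurate candidate is kept.

```python
def predict_next(history, window):
    """Candidate model: forecast the next value as the mean of the last `window` values."""
    recent = history[-window:]
    return sum(recent) / len(recent)


def evaluate(series, window, split):
    """Walk forward through the held-out part of the series and return the
    mean absolute error of one-step-ahead forecasts."""
    errors = [abs(predict_next(series[:t], window) - series[t])
              for t in range(split, len(series))]
    return sum(errors) / len(errors)


series = [20, 21, 23, 22, 24, 26, 25, 27, 29, 28, 30, 32]  # illustrative readings
split = 8  # the first 8 points are history; the rest are held out for evaluation

# One refinement round: evaluate each candidate and keep the most accurate.
scores = {window: evaluate(series, window, split) for window in (1, 2, 3, 4)}
best = min(scores, key=scores.get)
print(scores, "best window:", best)
```

In a real project each round would also revisit the data preparation and the model family, not just one parameter.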

From an IoT perspective, machine learning models are developed for use cases in different industry verticals. Some, such as anomaly detection, are common across the stack, while others are use-case specific, such as condition-based maintenance and predictive maintenance for manufacturing.
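Anomaly detection, mentioned above as a cross-cutting use case, can be sketched with a simple statistical baseline: learn the mean and standard deviation of readings from normal operation, then flag new readings that lie more than three standard deviations away. The threshold and sensor values are assumptions; production systems typically use more robust or learned models.

```python
from statistics import mean, stdev


def fit_baseline(normal_readings):
    """Learn what 'normal' looks like: mean and sample standard deviation."""
    return mean(normal_readings), stdev(normal_readings)


def is_anomaly(value, baseline, threshold=3.0):
    """Flag a reading whose z-score exceeds the threshold."""
    mu, sigma = baseline
    return abs(value - mu) / sigma > threshold


history = [70.1, 69.8, 70.4, 70.0, 69.9, 70.2, 70.3, 69.7]  # normal operation (illustrative)
baseline = fit_baseline(history)

incoming = [70.0, 70.5, 73.9, 69.6]
flags = [is_anomaly(v, baseline) for v in incoming]
print(flags)  # [False, False, True, False]
```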

Actionable Insights (Events & Reporting)

Actionable Insights, part of the Analytics Platform layer, are a set of services that make it easier to invoke the required action based on the analyzed data. An action can trigger events, call external services, or update reports and dashboards in real time. Examples include invoking a third-party service through an HTTP/REST connector to create a work order based on the outcome of the condition-based maintenance service, or invoking a single API to send mobile push notifications about maintenance events across devices. Actionable insights can also be configured from real-time dashboards, which let you create rules and the actions they should trigger.
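A minimal sketch of an HTTP/REST connector action, assuming a hypothetical work-order endpoint (`https://example.com/api/work-orders`) and payload schema. It builds a JSON `POST` with `urllib.request`; the `send` parameter defaults to `urllib.request.urlopen` but can be swapped out, which is how the usage lines below record the request instead of calling the network.

```python
import json
import urllib.request

WORK_ORDER_URL = "https://example.com/api/work-orders"  # hypothetical endpoint


def build_work_order_request(insight):
    """Turn an insight into a JSON POST request (hypothetical payload schema)."""
    payload = {"asset": insight["device"], "reason": insight["condition"], "priority": "high"}
    return urllib.request.Request(
        WORK_ORDER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def act_on(insight, send=urllib.request.urlopen):
    """Rule: when the maintenance model reports that service is due, create a work order."""
    if insight["condition"] != "maintenance_due":
        return None  # no action required
    return send(build_work_order_request(insight))


# Usage without network access: record requests instead of sending them.
sent = []
act_on({"device": "pump-2", "condition": "maintenance_due"}, send=sent.append)
act_on({"device": "pump-1", "condition": "normal"}, send=sent.append)
print(len(sent), sent[0].get_method(), sent[0].full_url)
```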

The service should also allow your application's custom code to be executed to carry out the desired functionality. Custom code can be uploaded to the cloud or built using the provided runtimes, and it integrates with the rest of the stack through the platform APIs.
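One way a platform could let custom code plug into the stack is an event-handler registry. The decorator `on_event` and the `dispatch` function below are hypothetical, not part of any specific platform API; they only illustrate user code being registered and then invoked by the runtime when a matching event arrives.

```python
from typing import Callable, Dict, List

# Hypothetical registry kept by the platform runtime: event type -> handlers.
_HANDLERS: Dict[str, List[Callable[[dict], dict]]] = {}


def on_event(event_type: str):
    """Hypothetical platform hook: register custom code for an event type."""
    def register(func: Callable[[dict], dict]) -> Callable[[dict], dict]:
        _HANDLERS.setdefault(event_type, []).append(func)
        return func
    return register


def dispatch(event_type: str, payload: dict) -> List[dict]:
    """What the runtime would do when an event arrives: run every registered handler."""
    return [handler(payload) for handler in _HANDLERS.get(event_type, [])]


@on_event("maintenance_due")
def schedule_technician(payload: dict) -> dict:
    # Custom application logic supplied by the user (illustrative).
    return {"action": "schedule", "asset": payload["asset"]}


results = dispatch("maintenance_due", {"asset": "pump-2"})
print(results)  # [{'action': 'schedule', 'asset': 'pump-2'}]
```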